Exhibition - To Flow Both Ways

FOCUS LAB, Troy Downtown, New York, The United States of America

During the spring 2022 semester, I participated in a pop-up exhibition about the Hudson Waterfront organized by our school and an organization named the Future of Small Cities Institute. The studio was divided into groups, and mine was responsible for producing the exhibit section on the future of the Hudson River's ecology and urban development. Unlike the other groups, which narrated their work through posters, we preferred a more immersive presentation, using various technological tools to enhance the viewers' experience and deliver the content more effectively.

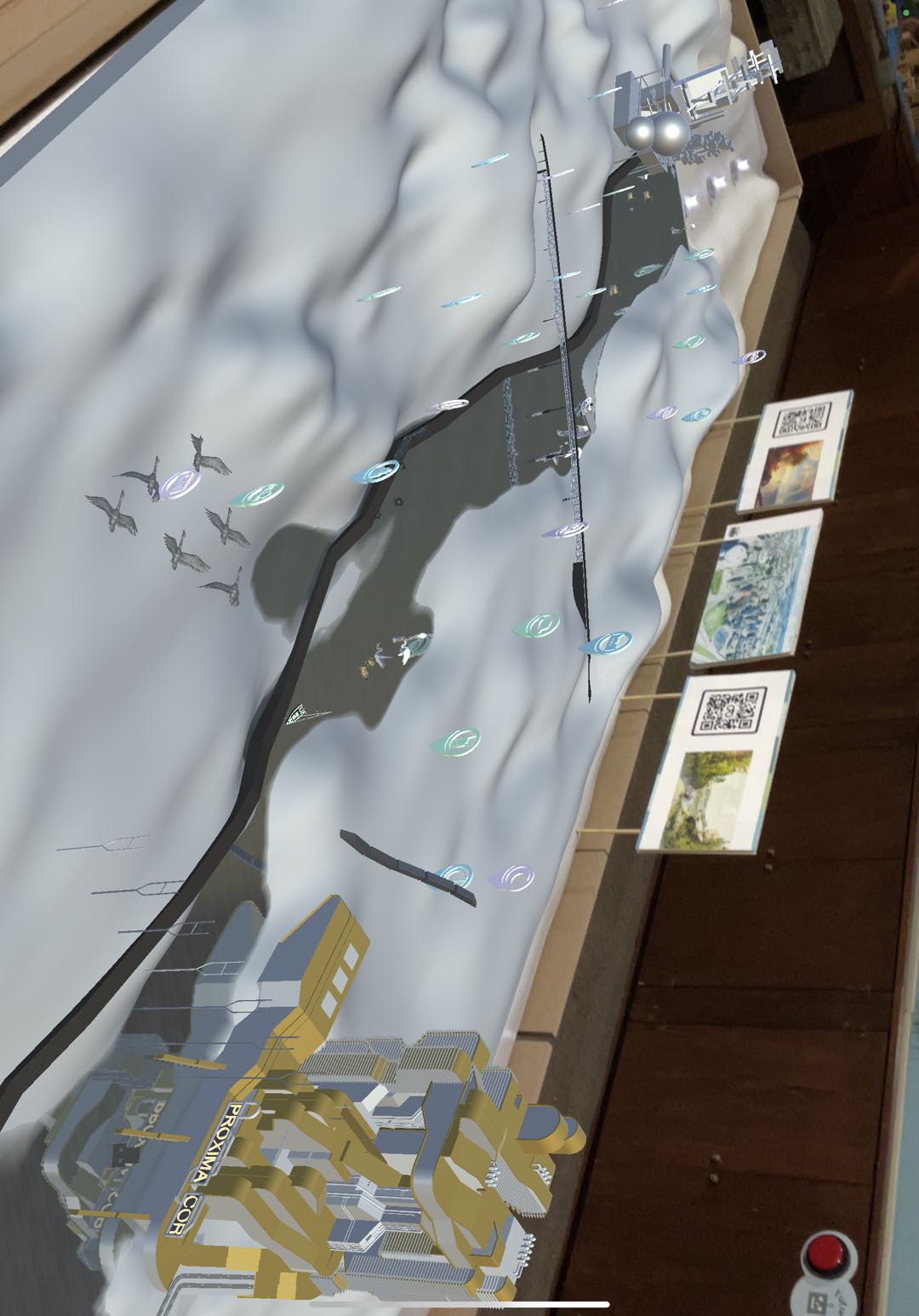

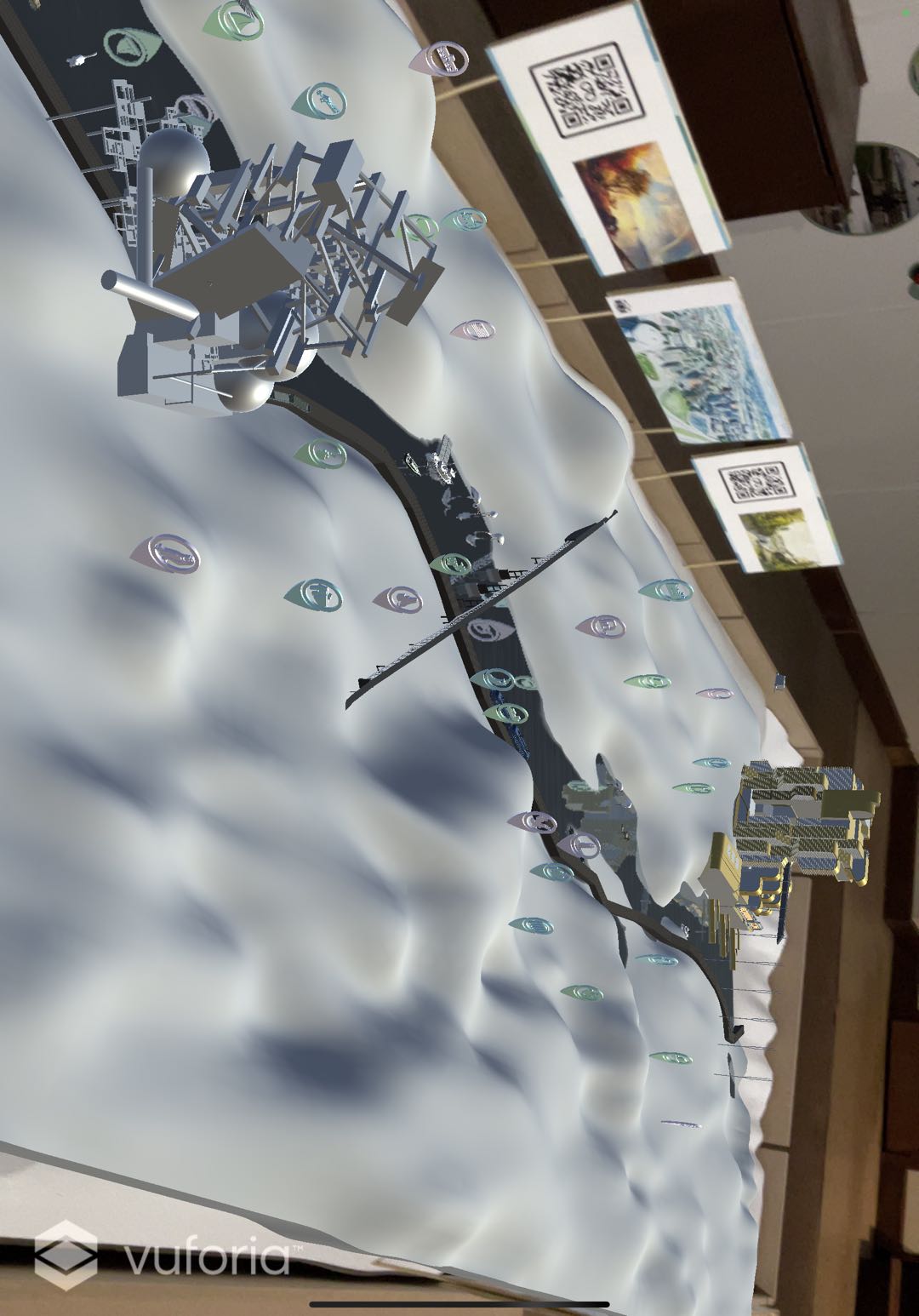

Initially, we relied on a small physical model so that people could get a sense of the three-dimensional form, but this tactile experience was unsatisfying because a static model offers only a single way of experiencing it. So, I suggested presenting our project in AR by designing a scannable topographic model. I was elected team leader after presenting this idea.

I divided the work so that some group members gathered information about the coastal habitats and degrees of industrialization of various cities. They were also tasked with finding digital 3D models of animals from different habitats and creating the topographic model. I led other teammates in discussing the future architectural design of industrialized cities along the Hudson River at RPI's Center for Architecture Science and Ecology (CASE). This effort resulted in two different projects for the industrial architecture of future cities.

I was in charge of producing the animations of animals, nature, and urban transportation because no one else on the team had the skills to do it. The 3D animations included historical trains along the Hudson River, futuristic flying trains, birds flying in the sky, modern boats, and future solar-powered boats, along with many other elements. Finally, I assembled the text we had collected about the different habitats, animals, and cities into floating icons placed at different locations in the AR model. In this way, viewers can see all the 3D animations while scanning the physical model with an iPad and can tap on the icons to see the specific information. This approach added to the interactivity of our project so that the public could immerse themselves in the material rather than just "look" at it. During this process, I used Rhino 3D for modeling, Blender for animation, and Unity with Vuforia for AR.

More Information: